AI Safety Newsletter #19

US-China Competition on AI Chips, Measuring Language Agent Developments, Economic Analysis of Language Model Propaganda, and White House AI Cyber Challenge

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

US-China Competition on AI Chips

Modern AI systems are trained on advanced computer chips which are designed and fabricated by only a handful of companies in the world. Last October, the Biden administration partnered with international allies to severely limit China’s access to leading AI chips.

Recently, there have been several notable developments in AI chips. Nvidia designed a new chip with performance just below the export control thresholds so that it could legally sell the chip in China. Other chips have been smuggled into China in violation of US export controls. Meanwhile, the US government has struggled to support domestic chip manufacturing plants and has taken further steps to prevent American investors from funding Chinese companies.

Nvidia slips under the export controls. The US banned exports to China only for chips that exceed designated performance thresholds. Nvidia, the American chip designer, responded to this rule by designing a chip that operates just below those thresholds.

Since then, Chinese firms including Alibaba, Baidu, ByteDance, and Tencent have ordered $5 billion worth of Nvidia's new chip. Unsurprisingly, Nvidia's competitor AMD is also considering building a similar chip to sell to Chinese firms.

Smuggling chips into China. There is a busy market for smuggled AI chips in China. Vendors report purchasing the chips secondhand from American buyers, or directly from Nvidia through companies headquartered outside of China. These chips regularly sell at twice the manufacturer's suggested retail price and are typically available only in small quantities. One option for keeping chips off the black market would be for the United States to track the legal owner of each chip and periodically verify that chips are still in the hands of their legal owners.

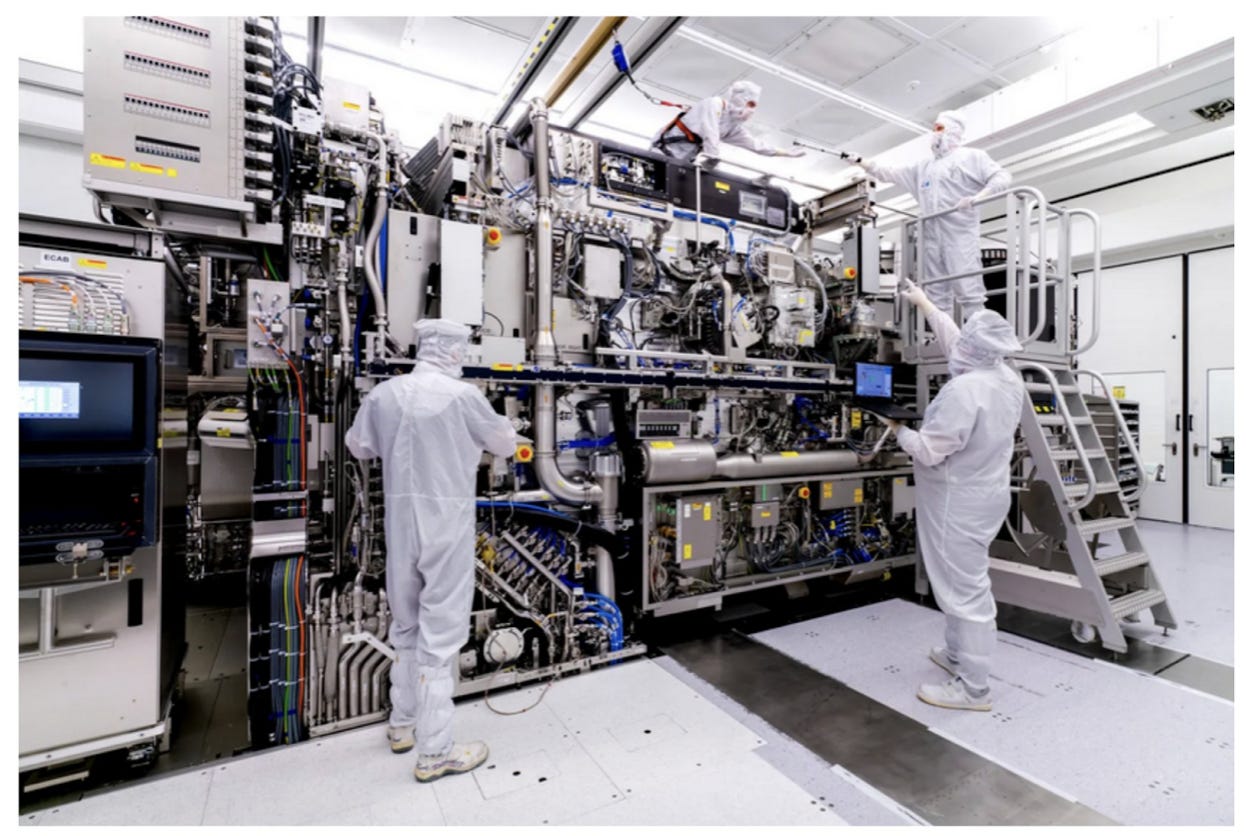

Taiwanese semiconductors around the world. The United States is investing in domestic chip manufacturing capacity. Last year’s CHIPS Act apportioned $52B in funding for the industry, and so far more than 460 companies have submitted bids to use that funding.

But it's not easy to build a semiconductor factory. TSMC, the world's leading semiconductor manufacturer, is building a new factory in Phoenix, Arizona. The company had to postpone the factory's opening due to a shortage of skilled labor. But when it announced plans to bring in some employees from its factories in Taiwan, the American labor union working on the Arizona factory protested, petitioning lawmakers to deny visas to the Taiwanese workers.

Meanwhile, Germany is investing billions in a partnership with TSMC to build a chip factory in Dresden.

The US bans American investment in Chinese tech firms. Despite the ban on selling advanced chips to Chinese entities, many American investors continued to fund Chinese firms developing AI and other key technologies. The Biden administration banned these investments last week, and the UK is considering doing the same. But some American investors had already anticipated the decision. Sequoia Capital, for example, spun off its Chinese investments into a separate firm headquartered in China only two months before the investments would have become illegal.

Measuring Language Agent Developments

Language agents are language models that take actions in interactive environments. For example, a language agent might be able to book a vacation, make a PowerPoint presentation, or run a small online business without continual oversight from a human. While today's language agents aren't very capable, many people are working on building more powerful and autonomous AI agents.

To measure the development of language agents, a new paper provides more than 1,000 tasks across eight different environments where language agents can be evaluated. For example, in the Web Browsing environment, the language agent is asked to answer a question by using the internet. Similarly, in the Web Shopping environment, the agent must search for a product that meets certain requirements. Programming skills are tested in the Operating System and Database environments, and in other environments the agent is asked to solve puzzles and move around a house. These tasks encompass a wide variety of the skills that would help an AI agent operate autonomously in the world.

How quickly will we see progress on this benchmark? GPT-4 can currently solve 43% of the tasks in this paper. Extrapolating from similar benchmarks, this benchmark could be reasonably solved (>90% performance) within several months to a few years. That could lead to a world where AI systems operate computers much as remote workers do today.

Autonomous AI agents would pose a variety of risks. Someone could give them a harmful goal, such as ChaosGPT’s goal of “taking over the world.” With strong coding capabilities, they could spread to different computers where they’re no longer under human control. They might pursue harmful goals such as self-preservation and power-seeking. Given these and other risks, Professor Yoshua Bengio suggested “banning powerful autonomous AI systems that can act in the world unless proven safe.”

An Economic Analysis of Language Model Propaganda

Large language models (LLMs) could be used to generate propaganda. But people have been creating propaganda for centuries. Will LLMs meaningfully increase the threat? To answer this question, a new paper compares the cost of hiring humans to create propaganda with the cost of generating it with an LLM. It finds that LLMs dramatically reduce the cost of generating propaganda.

To quantify the cost of hiring humans to generate propaganda, the paper looks at Russia's 2016 campaign to influence the United States presidential election by writing posts on social media. The Russian government paid workers between $3 and $9 per hour, expecting them to make 5 to 25 posts per hour supporting the Russian government’s views on the US presidential election.

Language models could be much cheaper. The paper estimates that posts written by humans cost a median of $0.44 per post. Given OpenAI's current prices for GPT-4, generating a 150-word post would cost less than $0.01.
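The per-post figures come from simple division: hourly wage divided by posts written per hour. The Python sketch below computes the range implied by the wage and output figures quoted above and compares the paper's $0.44 median with the under-$0.01 GPT-4 figure. It is a back-of-the-envelope illustration, not the paper's method: the constant and function names are hypothetical, and it does not try to reproduce how the paper arrives at its median.

```python
# Back-of-the-envelope cost per propaganda post, using the figures quoted above.
# Assumption: cost per post = hourly wage / posts per hour. The paper's own
# derivation of its $0.44 median is NOT reproduced here.

WAGE_PER_HOUR = (3.0, 9.0)  # USD per hour paid to human writers (low, high)
POSTS_PER_HOUR = (5, 25)    # posts each writer was expected to produce (low, high)
PAPER_MEDIAN_HUMAN = 0.44   # USD per post, the paper's median estimate
LLM_UPPER_BOUND = 0.01      # USD per 150-word GPT-4 post ("less than $0.01")


def cost_per_post(wage: float, posts: int) -> float:
    """Cost of one post for a writer earning `wage` per hour and writing `posts` per hour."""
    return wage / posts


def human_cost_range() -> tuple[float, float]:
    """Cheapest case: lowest wage, highest output. Priciest: highest wage, lowest output."""
    cheapest = cost_per_post(WAGE_PER_HOUR[0], POSTS_PER_HOUR[1])
    priciest = cost_per_post(WAGE_PER_HOUR[1], POSTS_PER_HOUR[0])
    return cheapest, priciest


if __name__ == "__main__":
    low, high = human_cost_range()
    print(f"Human cost per post: ${low:.2f} to ${high:.2f}")  # $0.12 to $1.80
    # The LLM figure is an upper bound, so the true ratio is larger than this.
    ratio = PAPER_MEDIAN_HUMAN / LLM_UPPER_BOUND
    print(f"A median human post costs more than {ratio:.0f}x a GPT-4 post")
```

The paper's $0.44 median falls inside the $0.12 to $1.80 range implied by the wage and output figures, and it is more than 40 times the under-$0.01 cost of a GPT-4 post.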

Even if AI outputs are unreliable and humans are paid to operate the language models, the paper estimates that LLMs would reduce the cost of a propaganda campaign by up to 70%. An even more concerning possibility that the paper doesn't consider is personalized propaganda, in which AI uses personal information about an individual to manipulate them more effectively.

AI will dramatically reduce the cost of spreading false information online. To combat it, we will need to develop technical methods for identifying AI outputs in the wild, and hold companies responsible if they build technologies that people will foreseeably use to cause societal-scale harm.

White House Competition Applying AI to Cybersecurity

The White House announced a two-year AI Cyber Challenge where competitors will use AI to inspect computer software and fix its security vulnerabilities. DARPA will run the competition in partnership with Microsoft, Google, Anthropic, and OpenAI, and the competition will award nearly $20M in prizes to winning teams.

Cybersecurity is already a global threat. Hackers have stolen credit card numbers and personal information from millions of Americans and shut down power grids in Ukraine. AI systems could make this worse by democratizing the ability to construct advanced cyberattacks and by shifting the offense-defense balance of cybersecurity. But AI systems can also be used to improve cyberdefenses.

The White House plan will use AI to improve the security of existing software systems so that they are less vulnerable to attacks. Organizers will provide pieces of software containing flaws that are difficult to notice, and competitors will have to build AI systems that automatically identify and repair these security vulnerabilities.

To enable broad participation, DARPA will provide $7M in funding for small businesses that want to enter the competition. The qualifying round will take place in spring 2024.

Links

An AI designed to recommend recipes suggested making mustard gas.

The FDA is soliciting feedback on its plans for regulating AI in medicine.

NIST is accepting comments on the draft of its new cybersecurity framework.

Meta disbands its protein folding team. Solving scientific challenges is useful for society, but companies are increasingly focusing on profitable chatbots.

News outlets protest against AI companies training models on their stories without permission.

A new poll finds that a majority of Americans support slowing down AI development and establishing a federal agency for AI.
