AI Safety Newsletter #52: An Expert Virology Benchmark

Plus, AI-Enabled Coups

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: AI now outperforms human experts in specialized virology knowledge on a new benchmark; a new report explores the risk of AI-enabled coups.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

An Expert Virology Benchmark

A team of researchers (primarily from SecureBio and CAIS) has developed the Virology Capabilities Test (VCT), a benchmark that measures an AI system's ability to troubleshoot complex virology laboratory protocols. Results on this benchmark suggest that AI has surpassed human experts in practical virology knowledge.

VCT measures practical virology knowledge, which has high dual-use potential. While AI virologists could accelerate beneficial research in virology and infectious disease prevention, bad actors could misuse the same capabilities to develop dangerous pathogens. Like the WMDP benchmark, the VCT is designed to evaluate practical dual-use scientific knowledge—in this case, virology.

The benchmark consists of 322 multimodal questions covering practical virology knowledge essential for laboratory work. Unlike existing benchmarks, these questions were deliberately designed to be "Google-proof"—requiring tacit knowledge that cannot be easily found through web searches. The questions were created and validated by PhD-level virologists and cover fundamental, tacit, and visual knowledge needed for practical work in virology labs.

Most leading AI models have already surpassed human experts in specialized virology knowledge. All but one frontier model outperformed human experts. The highest-performing model, OpenAI’s o3, achieved 43.8% accuracy on the benchmark, nearly double the human expert average of 22.1%. Leading models even outperform human virologists within their own specialties: for example, o3 outperformed 94% of virologists on subsets of questions representing their specific areas of expertise.

Publicly available AI systems should not have highly dual-use virology capabilities. The authors recommend that highly dual-use virology capabilities be excluded from publicly available systems, and suggest that know-your-customer mechanisms could ensure these capabilities remain accessible to researchers working in institutions with appropriate safety protocols.

They argue that “an AI’s ability to provide expert-level troubleshooting on highly dual-use methods should itself be considered a highly dual-use technology”—a standard that the paper shows already applies to many frontier AI systems. As a result of the paper, xAI has added new safeguards to their systems.

For more analysis, we also recommend reading Dan Hendrycks’ and Laura Hiscott’s article in AI Frontiers discussing implications of VCT.

AI-Enabled Coups

Researchers at the nonprofit Forethought have published a report on how small groups could use artificial intelligence to seize power. It discusses AI’s coup-enabling capabilities, factors increasing coup risk, potential pathways for AI-enabled coups, and possible mitigations.

AI may soon have coup-enabling capabilities. Future AI systems could surpass human experts in areas such as weapons development, controlling military systems, strategic planning, public administration, persuasion, and cyber offense. Frontier AI companies or governments could run millions of copies of these systems, each operating orders of magnitude faster than the human brain, 24/7. An organization with unilateral control of one of these systems could, even without broad popular or military support, seize control of a state.

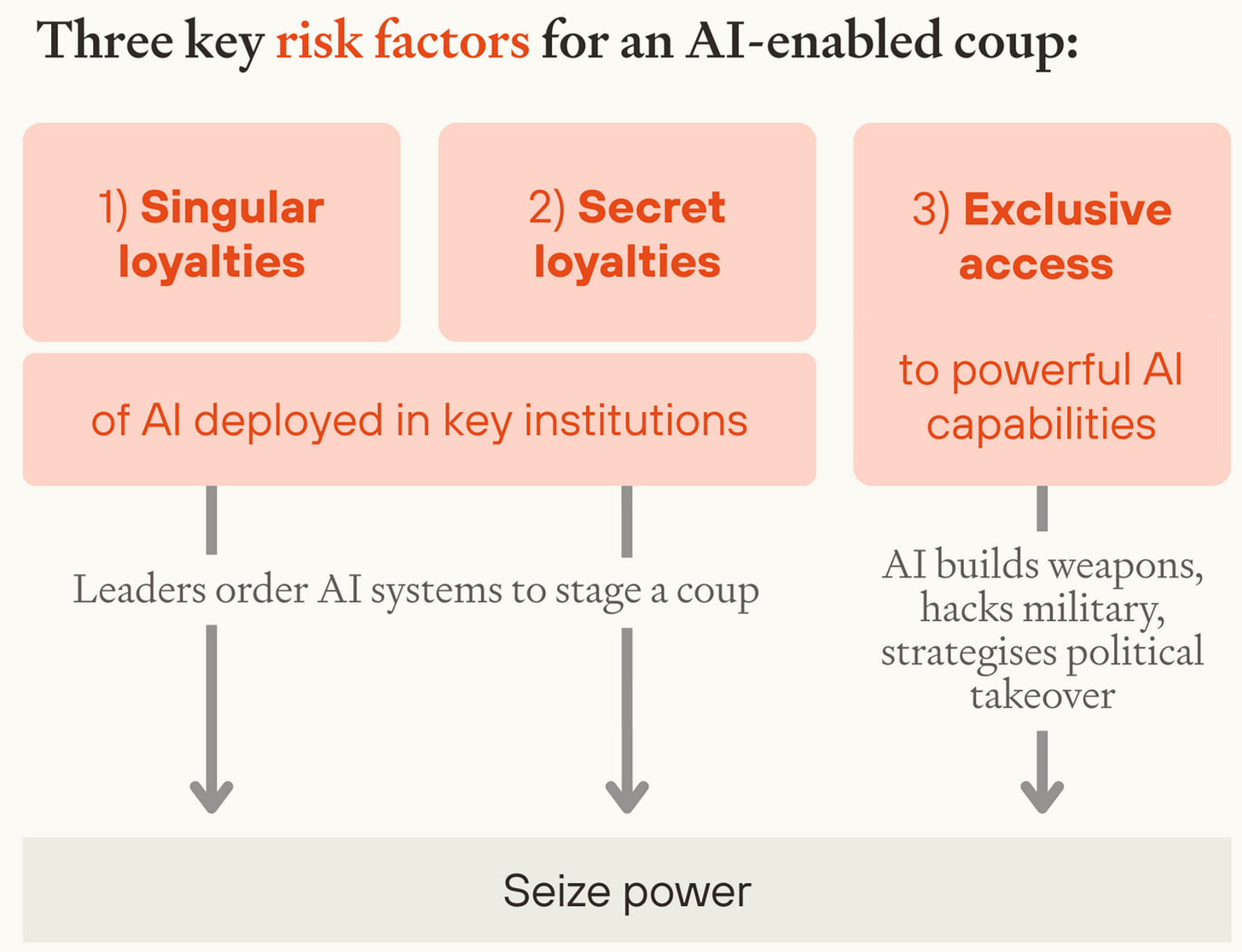

Risk factors for an AI-enabled coup. The report identifies three key risk factors that could increase the likelihood of an AI-enabled coup.

First, AI systems could be overtly designed with singular loyalty to specific leaders, bypassing traditional chains of command and enabling those leaders to act unilaterally.

Second, AI systems could be designed with secret loyalties that are undetectable until it is too late, allowing them to assist coup plotters covertly.

Third, exclusive access to the most powerful, coup-enabling AI capabilities could become concentrated within a small number of AI development projects or even among a few key individuals within those projects.

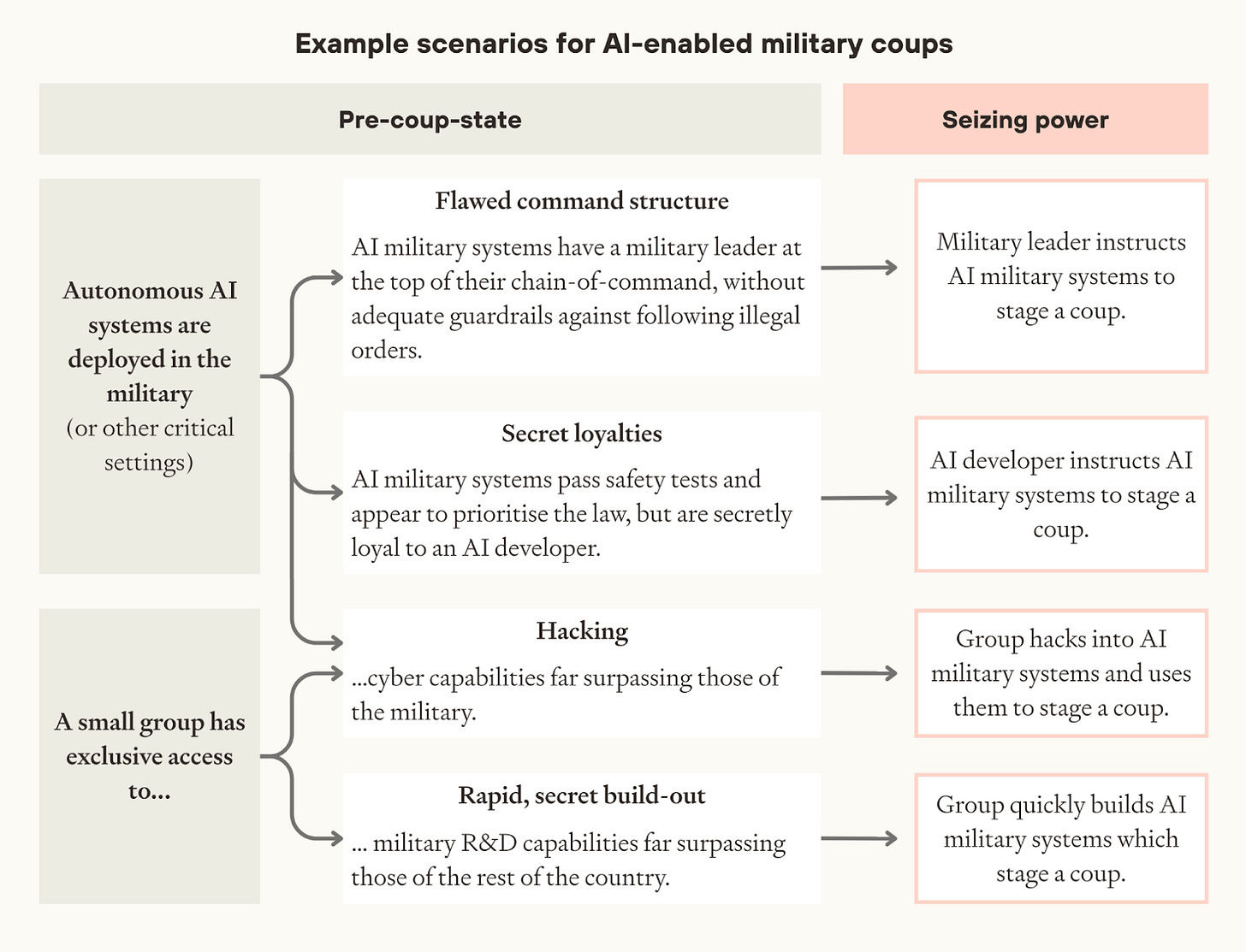

Concrete paths to an AI-enabled coup. The report outlines two families of potential scenarios for how an AI-enabled coup could occur.

One path involves the misuse of widely deployed military AI systems. Staging a coup currently requires support from human soldiers, but a small group that controls autonomous, advanced military AI systems could stage a military coup on their own.

Another path involves AI assisting in the processes that lead to democratic backsliding, for example by expanding state authority, replacing bureaucrats with loyal AI systems, spreading targeted propaganda, and controlling information.

Mitigations. To counter these risks, the report proposes several mitigation strategies focusing on establishing clear rules and technical enforcement mechanisms.

Establishing these rules will involve creating robust oversight bodies, clear legal frameworks defining legitimate AI use, and promoting transparency around AI capabilities and deployments.

Technical measures include developing robust guardrails to prevent misuse, implementing strong cybersecurity for AI systems, and designing AI command structures that require consensus or multi-party authorization for critical actions.

The report concludes that the risk of AI-enabled coups is alarmingly high. Unfortunately, this fact has the potential to become a self-fulfilling prophecy—even actors with good intentions might be tempted to seize power in order to prevent “bad actors” from doing so first.

However, there’s also reason to believe mitigation measures will be effective and politically tractable. Behind the ‘veil of ignorance’ as to who will be in a position of power to take advantage of coup-enabling AI, it’s in everyone’s best interest to make sure no one can.

Other news

Industry

OpenAI released o3 and o4-mini, two new frontier reasoning models.

Google released the model card for Gemini 2.5 Pro, though with minimal details about safety testing.

Several Epoch AI employees left to found a startup for automating jobs to bring about explosive growth from AI “as soon as possible.” This departure follows the revelation in January that OpenAI owns Epoch’s FrontierMath Benchmark, unbeknownst to dataset contributors.

TSMC says that it will eventually produce 30% of its sub-2nm chips in its US plants.

Government

The US Government informed Nvidia that it will need a license to export H20 chips to China.

The White House OSTP hired Dean Ball as Senior Policy Advisor on AI and Emerging Technology.

White House ‘AI and Crypto Czar’ David Sacks argued that BIS should receive more funding to close AI export control loopholes.

US Senator Mike Rounds introduced legislation to create a whistleblower incentive program at BIS to better detect AI chip smuggling to China.

AI Frontiers

Kevin Frazier and Graham Hardig argue that we need a new kind of insurance for AI job loss.

Stephen Casper and Laura Hiscott argue that corporate capture of AI research—echoing the days of Big Tobacco—thwarts sensible policymaking.

Dan Hendrycks and Laura Hiscott discuss the risk factors behind AI biothreats and the importance of friction.

See also: CAIS website, X account for CAIS, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.

The self-fulfilling prophecy part around coup risk wouldn’t really work, right? I’m talking about how good actors would stage a coup to stop bad actors from doing the same. Seizing power without supply chain and power disruptions seems hard, and if you can still run your AIs with lower power, so can your adversaries, so your coup wouldn’t really grant lasting power.