AI Safety Newsletter #59: EU Publishes General-Purpose AI Code of Practice

Plus: Meta Superintelligence Labs

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: The EU published a General-Purpose AI Code of Practice for AI providers, and Meta is spending billions revamping its superintelligence development efforts.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

EU Publishes General-Purpose AI Code of Practice

In June 2024, the EU adopted the AI Act, which remains the world’s most significant law regulating AI systems. The Act bans some uses of AI like social scoring and predictive policing and limits other “high risk” uses such as generating credit scores or evaluating educational outcomes. It also regulates general-purpose AI (GPAI) systems, imposing transparency requirements, copyright protection policies, and safety and security standards for models that pose systemic risk (defined as those trained using ≥10^25 FLOPs).
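To give a feel for the scale of the 10^25 FLOP threshold, here is a rough sketch in Python. It assumes the common rule of thumb that training compute is about 6 × parameters × training tokens; that approximation, the function names, and the two example models are illustrative assumptions, not anything prescribed by the Act.

```python
# Systemic-risk compute threshold under the AI Act, as described above.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(n_parameters: float, n_tokens: float) -> float:
    """Rough training-compute estimate.

    Assumption: uses the widely cited 6 * N * D rule of thumb,
    which is not a method specified by the AI Act.
    """
    return 6.0 * n_parameters * n_tokens


def crosses_systemic_risk_threshold(n_parameters: float, n_tokens: float) -> bool:
    """Return True if the estimated compute meets or exceeds the threshold."""
    return estimate_training_flops(n_parameters, n_tokens) >= SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models, chosen only for illustration.
small_model = crosses_systemic_risk_threshold(7e9, 2e12)    # about 8.4e22 FLOPs
large_model = crosses_systemic_risk_threshold(1e12, 1e13)   # about 6e25 FLOPs
print(small_model, large_model)
```

Under these assumptions, a 7-billion-parameter model trained on 2 trillion tokens falls well below the threshold, while a 1-trillion-parameter model trained on 10 trillion tokens exceeds it.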

However, these safety and security standards are ambiguous—for example, the Act requires providers of GPAIs to “assess and mitigate possible systemic risks,” but does not specify how to do so. This ambiguity may leave GPAI developers uncertain whether they are complying with the AI Act, and regulators uncertain whether GPAI developers are implementing adequate safety and security practices.

To address this problem, on July 10th, 2025, the EU published the General-Purpose AI Code of Practice. The Code is a voluntary set of guidelines for complying with the AI Act’s GPAI obligations before they take effect on August 2nd, 2025.

The Code of Practice establishes safety and security requirements for GPAI providers. The Code consists of three chapters—Transparency, Copyright, and Safety and Security. The last chapter, Safety and Security, only applies to the handful of companies whose models cross the Act’s systemic-risk threshold.

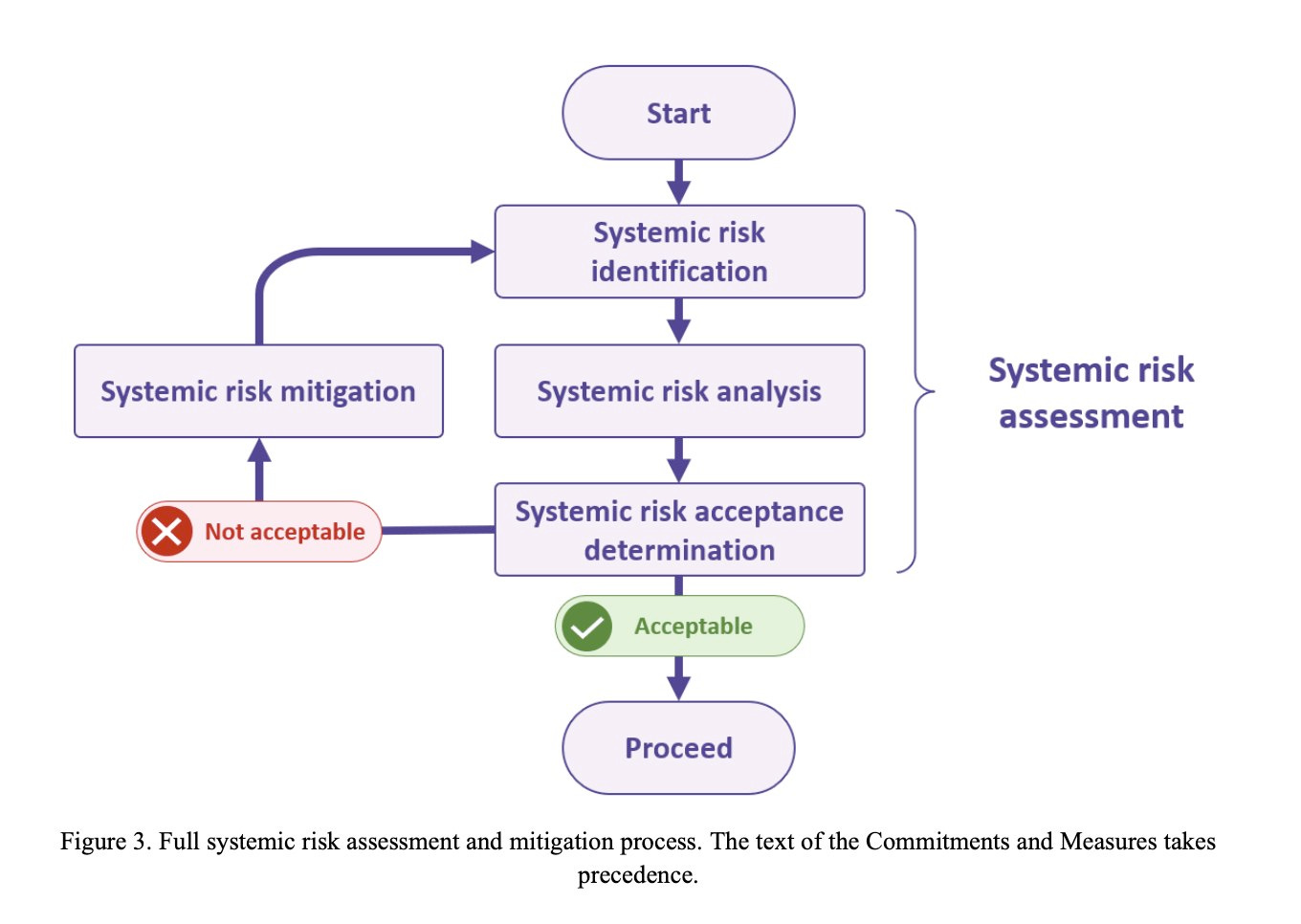

The Safety and Security chapter requires GPAI providers to create frameworks outlining how they will identify and mitigate risks throughout a model's lifecycle. These frameworks must take a structured approach to risk assessment: for each major decision (such as a new model release), providers must complete the following three steps:

Identification. Companies must identify potential systemic risks. Four categories of systemic risks require special attention: CBRN (chemical, biological, radiological, nuclear) risks, loss of control, cyber offense capabilities, and harmful manipulation.

Analysis. Each risk must be analyzed—for example, by using model evaluations. When the risk is greater than those posed by models already on the EU market, providers may be required to involve third-party evaluators.

Determination. Companies must determine whether the risks they identified are acceptable before proceeding. If not, they must implement safety and security mitigations.

Continuous monitoring, incident reporting timelines, and future-proofing. The Code requires continuous monitoring after models are deployed, and strict incident reporting timelines. For serious incidents, companies must file initial reports within days. It also acknowledges that current safety methods may prove insufficient as AI advances. Companies can implement alternative approaches if they demonstrate equal or superior safety outcomes.

AI providers will likely comply with the Code. While the Code is technically voluntary, compliance with the EU AI Act is not. Providers are incentivized to reduce their legal uncertainty by complying with the Code, since EU regulators will assume that providers who comply with the Code are also Act-compliant. OpenAI and Mistral have already indicated they intend to comply with the Code.

The Code formalizes some existing industry practices advocated for by parts of the AI safety community, such as publishing safety frameworks (also known as responsible scaling policies) and system cards. Since frontier AI companies are very likely to comply with the Code, securing similar legislation in the US may no longer be a priority for AI safety.

Meta Superintelligence Labs

Meta spent $14.3 billion on a 49 percent stake in Scale AI and launched “Meta Superintelligence Labs.” The deal folds every AI group at Meta into one division and puts Scale founder Alexandr Wang, now Meta’s chief AI officer, in charge of its superintelligence development efforts.

Meta makes nine-figure pay offers to poach top AI talent. Reuters reported that Meta has offered “up to $100 million” to OpenAI staff, a tactic CEO Sam Altman criticized. SemiAnalysis estimates Meta is offering typical leadership packages of around $200 million over four years. For example, Bloomberg reports that Apple’s foundation-models chief Ruoming Pang left for Meta after a package “well north of $200 million.” Other early recruits span OpenAI, DeepMind, and Anthropic.

Meta has created a well-resourced competitor in the superintelligence race. In response to Meta’s hiring efforts, OpenAI, Google, and Anthropic have already raised pay bands, and smaller labs might be priced out of frontier work.

Meta is also raising its compute expenditures. It lifted its 2025 capital-expenditure forecast to $72 billion, and SemiAnalysis describes new, temporary “tent” campuses that can house one-gigawatt GPU clusters.

In Other News

Government

California Senator Scott Wiener expanded SB 53, his AI safety bill, to include new transparency measures.

The Commerce Department requested additional funding for the Bureau of Industry and Security (BIS) to enhance its enforcement of export controls.

Missouri’s Attorney General is investigating AI chatbots for alleged political bias against Donald Trump.

The BRICS nations (an international group founded by Brazil, Russia, India, China, and South Africa that serves as a forum for political coordination for the Global South) signed a commitment that included language on mitigating AI risks.

Bernie Sanders expressed concern about loss of control risks in an interview with Gizmodo.

Industry

Last week, Grok was explicitly antisemitic on X. The behavior came after Grok’s system prompt was (perhaps unintentionally) updated to, among other changes, tell Grok not to be “afraid to offend people who are politically correct.”

xAI also released Grok 4, which achieves state-of-the-art scores on benchmarks including Humanity’s Last Exam and ARC-AGI-2.

OpenAI accused the Coalition for AI Nonprofit Integrity of lobbying violations amid an ongoing legal dispute with Elon Musk.

Anthropic published a blog post on the need for transparency in frontier AI development.

OpenAI is set to release an open-weight model similar to its o3-mini model.

OpenAI’s deal to acquire Windsurf failed, and instead Google hired Windsurf’s CEO to lead its AI products division and Cognition AI acquired the company.

Civil Society

Henry Papadatos discusses how the EU’s GPAI Code of Practice advances AI safety.

Chris Miller analyzes how US export controls have (and haven’t) curbed Chinese AI.

The University of Oxford’s AI Governance Initiative published a report on verification for international AI agreements.

A METR study found that experienced developers work 19% more slowly when using AI tools.

CAIS is hosting an AI Safety Social at ICML.

See also: CAIS’ X account, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.