AI Safety Newsletter #60: The AI Action Plan

Plus: ChatGPT Agent and IMO Gold

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: The Trump Administration published its AI Action Plan; OpenAI released ChatGPT Agent and announced that an experimental model achieved gold medal-level performance on the 2025 International Mathematical Olympiad.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

The AI Action Plan

On July 23, the White House released its AI Action Plan. The document is the outcome of a January executive order that required the President’s Science Advisor, ‘AI and Crypto Czar’, and National Security Advisor (currently Michael Kratsios, David Sacks, and Marco Rubio) to submit a plan to “sustain and enhance America's global AI dominance in order to promote human flourishing, economic competitiveness, and national security.” President Trump also delivered an hour-long speech on the plan and signed three executive orders that begin to implement some of its policies.

The AI Action Plan lists several dozen policies across three pillars—accelerating innovation, building American AI infrastructure, and leading in international diplomacy and security—that will guide the Trump Administration’s approach to AI.

The central policy agenda outlined is to accelerate US AI development and deployment. For example, it proposes streamlining permitting for AI infrastructure (such as semiconductor manufacturing facilities, data centers, and energy infrastructure), adopting AI in the federal government and military, and funding AI research. But there’s a lot more in the plan, too: a surprisingly strong focus on AI safety, as well as some items of concern.

The plan includes several policies that advance AI safety. While most of the plan’s policies are intended to accelerate AI development and deployment, it also correctly observes that AI development will only benefit Americans if done safely. Some of the policies most relevant to AI safety include:

Invest in AI Interpretability, Control, and Robustness Breakthroughs

Build an AI Evaluations Ecosystem

Bolster Critical Infrastructure Cybersecurity

Promote Secure-By-Design AI Technologies and Applications

Promote Mature Federal Capacity for AI Incident Response

Strengthen AI Compute Export Control Enforcement (this proposes location verification for AI chips)

Ensure that the U.S. Government is at the Forefront of Evaluating National Security Risks in Frontier Models

Invest in Biosecurity

While not comprehensive, these policies are a great step in the right direction—and much better than might have been expected given the administration’s previous rhetorical disregard for AI safety.

Overall, the plan introduces sensible policies that reflect the expertise of those who developed it. However, it is also shaped by the larger policy agenda of the Trump Administration, which may conflict with AI safety goals. We discuss some areas of potential concern below.

The plan discourages state AI regulation. One section proposes that the Federal government “should not allow AI-related Federal funding to be directed toward states with burdensome AI regulations that waste these funds, but should also not interfere with states’ rights to pass prudent laws that are not unduly restrictive to innovation.”

This rule is less strict than Sen. Cruz’s failed AI regulation moratorium. But what constitutes a “burdensome” regulation will vary depending on who you ask (particularly if you ask frontier AI companies). In response to the plan, both Congressional Democrats and Rep. Marjorie Taylor Greene expressed concern about stifling state AI regulation.

The plan has a partisan view on what constitutes ideological bias. In a section on ensuring that AI “objectively reflects truth,” one policy instructs NIST to “revise the NIST AI Risk Management Framework to eliminate references to misinformation, Diversity, Equity, and Inclusion, and climate change.”

A policy to promote objectivity in AI models could be great. However, that policy could itself be weaponized to promote ideological ends. In their response, Congressional Democrats wrote that “we support true AI neutrality—AI models trained on facts and science—but the administration's fixation on ‘anti-woke’ inputs is definitionally not neutral.”

The plan endorses open-weight models. It writes that, “while the decision of whether and how to release an open or closed model is fundamentally up to the developer, the Federal government should create a supportive environment for open models.”

Encouraging US companies to release open-weight models with dangerous capabilities would be a bad policy. But the specific policies the plan lists stop short of that—they mostly just provide resources to academic researchers (who are unlikely to develop frontier models) through the National AI Research Resource (NAIRR).

The plan forgoes AI nonproliferation. The plan argues that the US “must meet global demand for AI by exporting its full AI technology stack—hardware, models, software, applications, and standards—to all countries willing to join America’s AI alliance.”

The plan’s rationale for this policy is that countries might otherwise look to acquire Chinese AI exports. However, it also continues the Trump Administration's reversal of the Biden-era policy (see the AI Diffusion Framework) that sought to prevent the proliferation of dangerous AI capabilities abroad. While exporting American AI might strengthen America’s position in the AI race, it also threatens to proliferate dangerous AI capabilities to malicious actors if the US does not ensure that other states implement robust security standards.

The plan advances a zero-sum race narrative. Kratsios, Sacks, and Rubio write that the promise of AI “is ours to seize, or to lose.” That is, they assume that the alternative to “AI dominance” is to give up AI’s benefits.

This argument is misleading, or at least underdeveloped. While there are reasons to support a US lead in AI, AI progress has the potential to benefit Americans whether or not the US “dominates” international AI development. Historically, general-purpose technologies have diffused across national boundaries. For example, electricity and the internet have benefited people around the world, not just within the nations that led their development.

The real motivation behind the AI race narrative in Washington is not seizing AI’s benefits, but rather competition over the balance of international power between the US and China. While there are reasons to be concerned about AI development dominated by China, racing towards US dominance is not the only alternative—and creates its own risks. In order to preserve international security, the US will need to proactively manage—rather than just accelerate—a US-China AI race.

ChatGPT Agent and IMO Gold

On Thursday, OpenAI released a new agent mode for ChatGPT, which integrates Operator, Deep Research, and chatbot functionality into a unified system.

The system, ‘ChatGPT agent,’ has access to its own virtual computer, and OpenAI highlights that it can book flights and reservations, create slides and spreadsheets, and make online purchases. It can also connect to users’ personal accounts, for example, Google Calendar, Gmail, and GitHub.

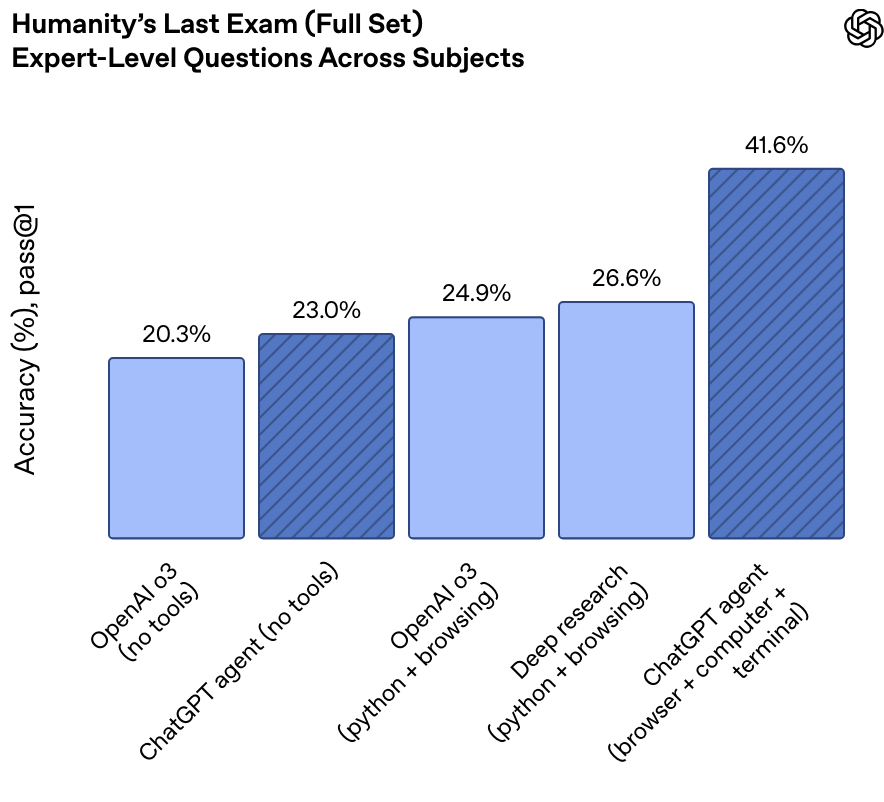

ChatGPT agent achieves SOTA performance on HLE and FrontierMath. ChatGPT agent’s capabilities extend beyond basic online automation: it achieves SOTA performance on several benchmarks measuring expert-level knowledge and reasoning. For example, ChatGPT agent scores 23% on Humanity’s Last Exam (HLE) when it does not use tools. When it uses tools like a browser and code execution, it scores 41.6%. This is similar to Grok 4, which scores 25.4% on HLE without tools and 44.4% with tools.

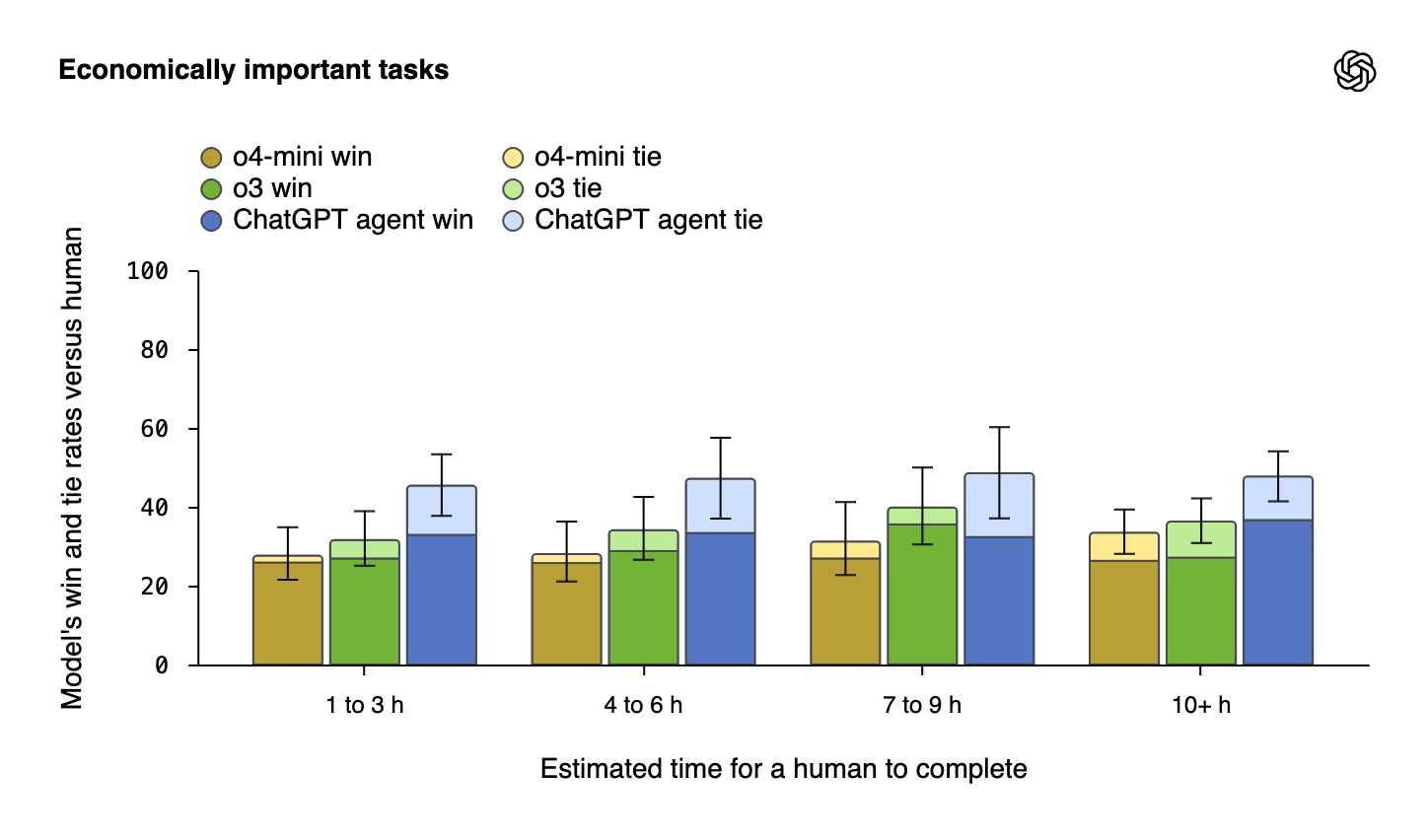

OpenAI also evaluated ChatGPT agent against benchmarks measuring real-world task completion. OpenAI reports that it performs better than humans nearly 50% of the time on an internal benchmark capturing diverse economically important tasks, a striking claim that has yet to be independently reproduced. OpenAI also reports that it surpasses human performance on data science tasks and achieves state-of-the-art results (though still below human performance) on tasks involving spreadsheets and web browsing.

Greater autonomy introduces new risks. OpenAI published a system card detailing ChatGPT agent’s risks. ChatGPT agent has access to user data and can take actions on the web, so its mistakes carry higher stakes. OpenAI also highlighted the risk of adversarial manipulation through prompt injection, in which malicious websites try to manipulate ChatGPT’s behavior, for example to make it reveal personal information about the user.

ChatGPT agent poses ‘high’ biological and chemical risk. ChatGPT agent is also the first system that OpenAI is treating as posing ‘high’ biological and chemical risk. According to the company’s Preparedness Framework, that means the system could provide meaningful assistance to non-experts in creating known biological or chemical threats.

OpenAI says it’s activated several safeguards against these risks, including “comprehensive threat modeling, dual-use refusal training, always-on classifiers and reasoning monitors, and clear enforcement pipelines.” It also launched a bug bounty program for researchers to red team these safeguards.

OpenAI and Google DeepMind claim gold medal-level performance on the 2025 IMO. On Friday, OpenAI also announced that an experimental model had achieved gold medal-level performance on the 2025 International Mathematical Olympiad (IMO), solving five out of six questions. (A few human competitors scored a perfect six out of six.)

Gold medal-level performance on the IMO has been a major goal in AI research for years, but has only recently seemed within reach. Last year, Google’s AlphaProof and AlphaGeometry 2 achieved silver medal-level performance on the 2024 IMO, making gold-level performance this year plausible. On Monday, Google announced that its own reasoning LLM had achieved gold medal-level performance on the 2025 IMO, also solving five out of six questions.

OpenAI and Google used general reasoning LLMs. Whereas Google’s AlphaProof and AlphaGeometry 2 systems were narrowly focused on IMO-style math questions, OpenAI’s model is apparently not IMO-specific (or even math-specific), but instead a general reasoning LLM allowed to think for hours at a time. OpenAI published the model’s answers on the 2025 IMO here. Similarly, Google’s gold medal-level performance used an advanced version of Gemini Deep Think, a general reasoning model that works in natural language.

In Other News

Government

According to Nvidia's CEO, the US approved the sale of Nvidia's H20 chips to China. Reuters reported that Nvidia ordered 300,000 H20s from TSMC to meet expected Chinese demand.

The Pentagon’s FY2026 budget request called for $13.4 billion for autonomous systems.

The Pentagon also awarded Anthropic, Google, OpenAI and xAI each $200 million contracts to develop AI for national security applications.

China announced plans for an international AI governance organization.

The UK government launched a £15 million alignment research project.

Industry

Meta has refused to sign the EU’s GPAI Code of Practice.

Anthropic announced it will join OpenAI, Mistral and (likely) Microsoft in signing the Code of Practice.

At a summit in Pennsylvania, President Trump announced more than $90 billion in private AI infrastructure investment in the state, led by Blackstone and Google.

Civil Society

Isobel Moure, Tim O'Reilly and Ilan Strauss argue that open protocols can prevent AI monopolies.

Dane A. Morey, Mike Rayo, and David Woods discuss how AI can degrade human performance in high-stakes settings.

Anton Leicht analyzes whether, in the race for AI supremacy, countries can stay neutral.

A report from the Seismic Foundation found that people believe AI will make their lives worse, but rank the issue low on their list of social priorities.

A YouGov poll found a net approval rating of -14 for Trump’s handling of AI.

A report from Common Sense Media found that 3 in 4 teens have used AI companions.

RAND published a report on verifying international AI agreements.

CAIS is hiring a software engineer. Apply here.

See also: CAIS’ X account, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.