AI Safety Newsletter #65: Measuring Automation and Superintelligence Moratorium Letter

Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

In this edition: A new benchmark measures AI automation; 50,000 people, including top AI scientists, sign an open letter calling for a superintelligence moratorium.

Listen to the AI Safety Newsletter for free on Spotify or Apple Podcasts.

CAIS and Scale AI release Remote Labor Index

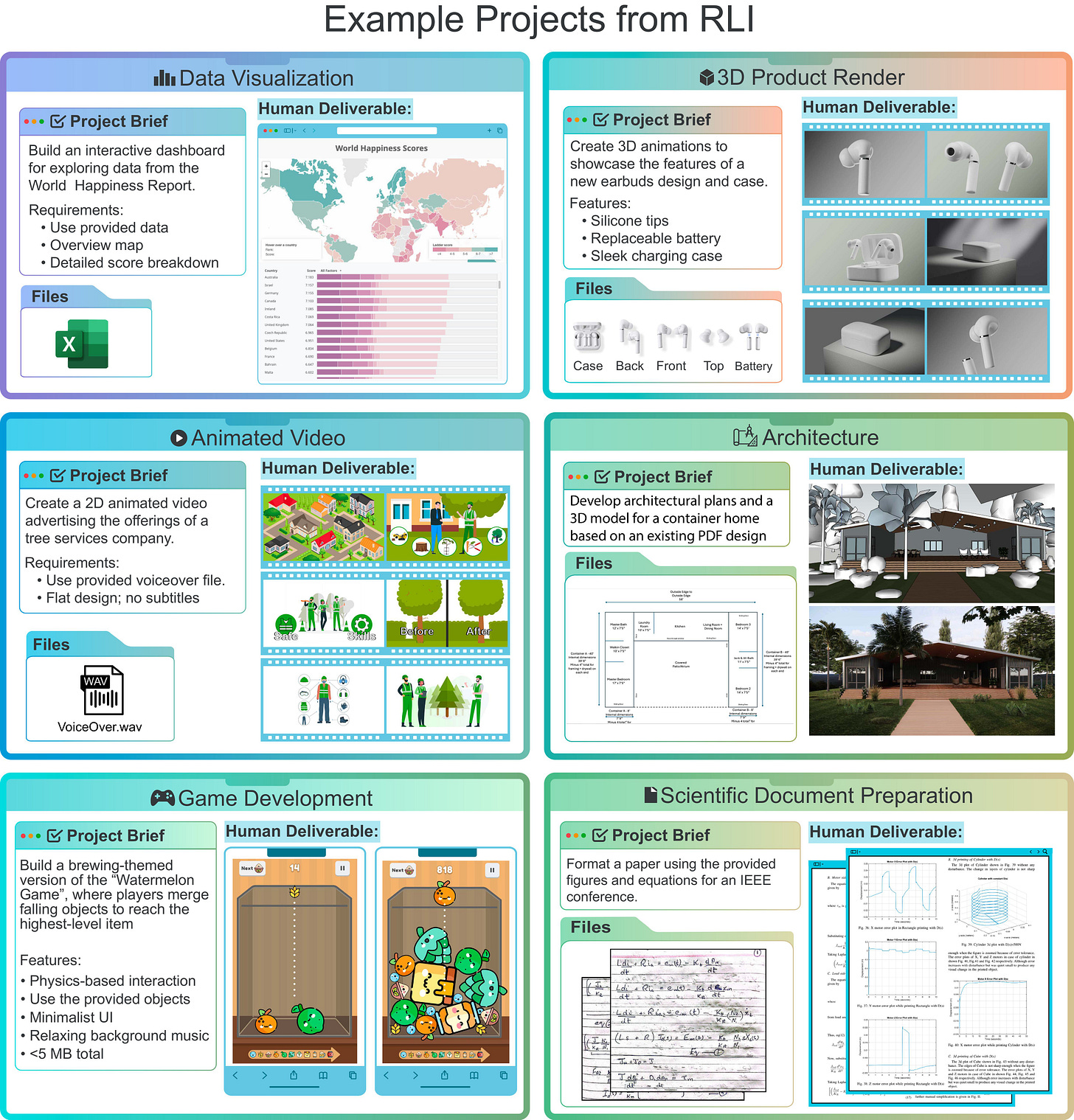

The Center for AI Safety (CAIS) and Scale AI have released the Remote Labor Index (RLI), which tests whether AIs can automate a wide array of real computer work projects. RLI is intended to inform policymakers, AI researchers, and businesses about the effects of automation as AI continues to advance.

RLI is the first benchmark of its kind. Previous AI benchmarks measure AIs on their general intelligence or on isolated, specialized tasks, such as basic web browsing or coding. While these benchmarks capture useful capabilities, they don’t measure how AIs can affect the economy. RLI instead collects computer-based work projects from the real economy, spanning many different professions, such as architecture, product design, and video game development.

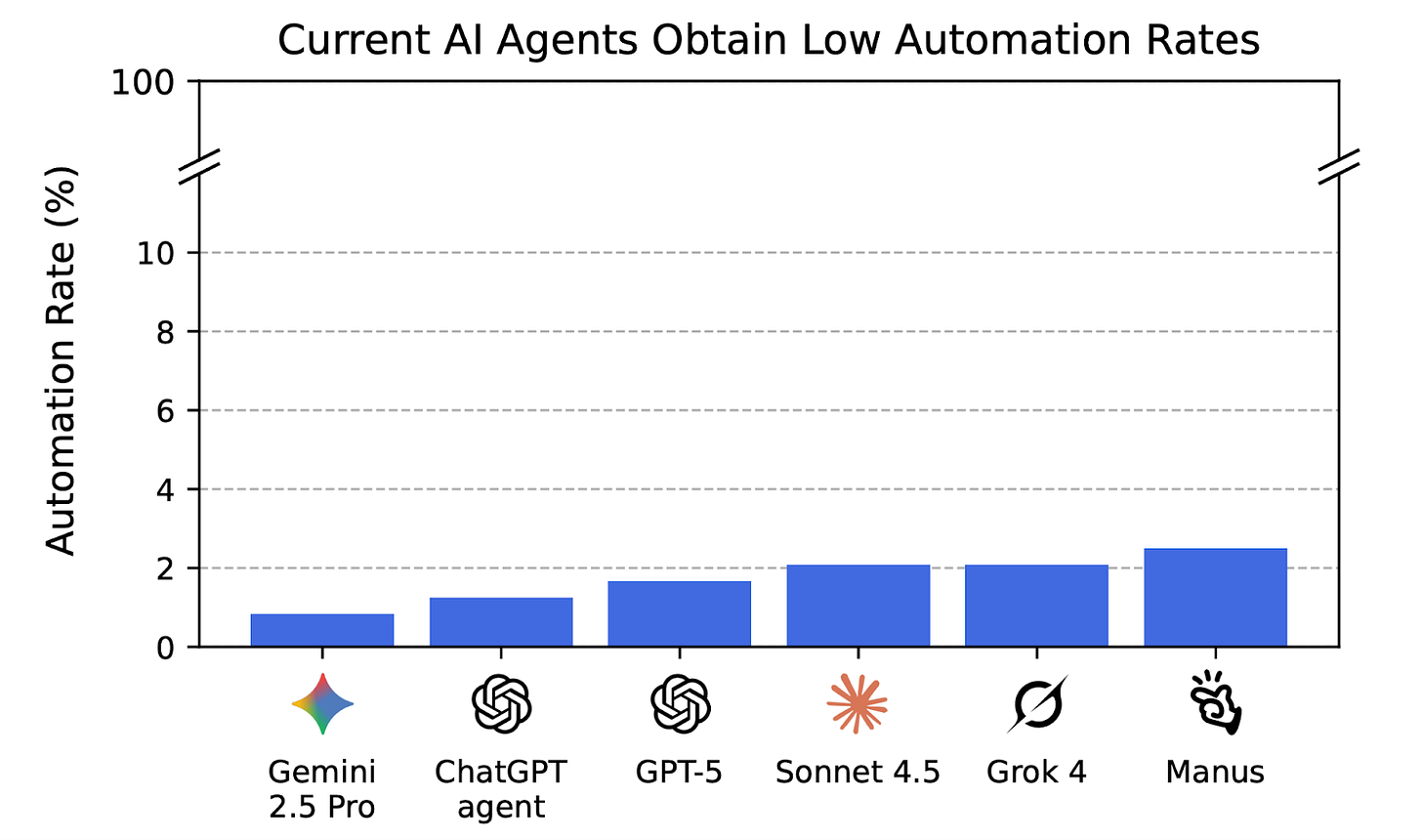

Current AI agents fully automate very few work projects. Although AIs score highly on existing narrow benchmarks, RLI reveals a gap in those measurements: AIs cannot currently automate most economically valuable work, and the most capable AI agent automates only 2.5% of the work projects on RLI. Still, there are signs of steady improvement over time.

Bipartisan Coalition for Superintelligence Moratorium

The Future of Life Institute (FLI) introduced an open letter with over 50,000 signatories endorsing the following text:

"We call for a prohibition on the development of superintelligence, not lifted before there is (1) broad scientific consensus that it will be done safely and controllably, and (2) strong public buy-in."

The signatories form the broadest group to sign an open letter about AI safety in history. Among the signatories are five Nobel laureates, the two most cited scientists of all time, religious leaders, and major figures in public and political life from both the left and the right.

This statement builds on earlier open letters about AI risks, such as the 2023 CAIS statement acknowledging AI extinction risk and FLI’s earlier letter calling for a pause on AI training. The CAIS statement was intended to establish a consensus about risks from AI, while the first FLI letter called for a specific policy on a clear time frame. The new FLI letter does both: its broad coalition and associated polling establish a powerful consensus about the risks of AI, and it also calls for action.

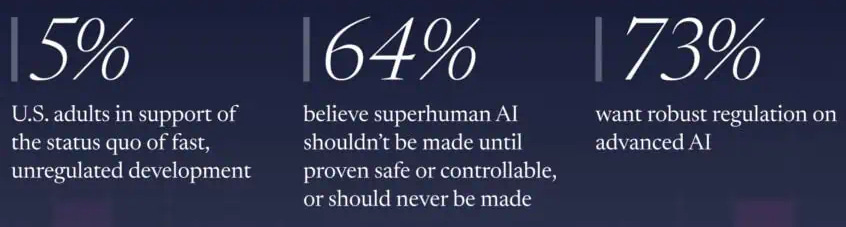

In the past, critics of AI safety have dismissed the concept of superintelligence and AI risks, citing a lack of mainstream scientific and public support. The breadth of people who have signed this open letter shows that opinions are changing. Polling released alongside the open letter supports this: approximately 2 in 3 US adults believe that superintelligence shouldn’t be created, at least until it is proven safe and controllable.

A broad range of news outlets have covered the statement. Dean Ball and others pushed back on the statement on X, pointing out that it lacks specific details on how a moratorium would be implemented and that implementing one would be difficult. Scott Alexander and others responded by defending statements of consensus as a tool for motivating the development of specific AI safety policies.

In Other News

Government

Senator Jim Banks introduced the GAIN AI Act, which would give US companies and individuals first priority to buy AI chips from US companies, ahead of foreign buyers.

State legislators Alex Bores (behind the RAISE Act) and Scott Wiener (behind SB 1047 and SB 53) have both announced runs for US Congress.

Industry

You can now officially order a home robot for $500 per month.

OpenAI announces its restructuring into a public benefit corporation, along with new terms in its relationship with Microsoft.

Anthropic announces an expansion of its compute to up to 1 million Google TPUs, a deal worth tens of billions of dollars.

OpenAI’s Sora app was briefly the most downloaded app on the App Store.

Civil Society

A series of billboards advertising “Replacement AI” drew attention in San Francisco last week.

Bruce Schneier and Nathan E. Sanders discuss AIs’ effect on representative democracy.

A forecast based on the definition of AGI proposed last week estimates a 50% chance that AGI will be released by the end of 2028 and an 80% chance that it will be released by the end of 2030.

See also: CAIS’ X account, our paper on superintelligence strategy, our AI safety course, and AI Frontiers, a new platform for expert commentary and analysis.
